by Ian Mann

March 03, 2023

Guest contributor Peter Cole interviews jazz engineer and lecturer Dave Clements, the inventor of 'Robot Glasper'.

Peter Cole writes:

I’m a jazz pianist, vocalist, arranger and composer. I have lectured at Middlesex and Trinity College of Music.

I’ve written an interview, below, with jazz engineer and lecturer Dave Clements.

It’s about a ‘jazzbot’ he has created that can improvise its own solos. I think people would be amused by it and interested to know about it.

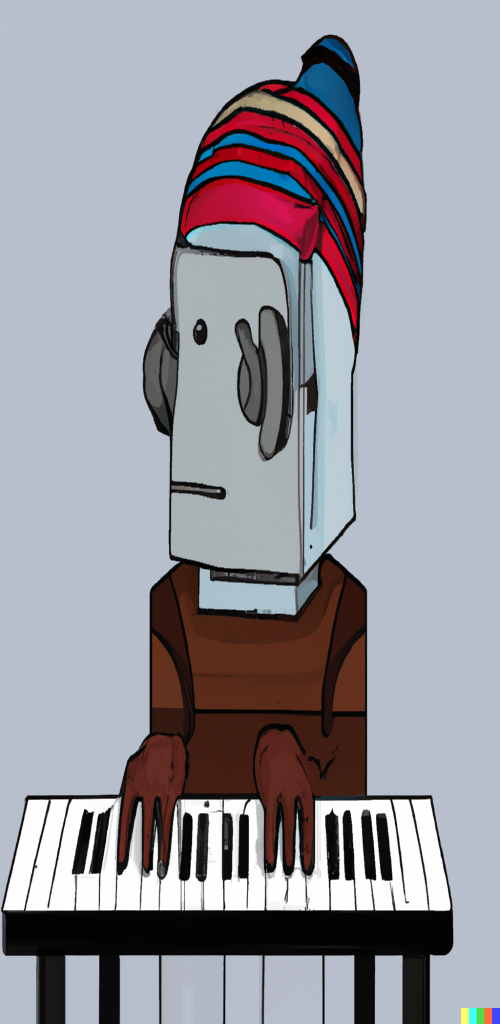

Meet ‘Robot Glasper’: A ‘Jazzbot’ Who Invents His Own Solos

Sample Link: https://youtu.be/al8lYziiYLs

By Peter Cole

‘Why would I ever want to jam with a machine?’ I once heard Wynton Marsalis remark, when asked whether future jazz musicians might want to play with computers rather than with humans.

But he hadn’t met ‘Robot Glasper’.

The humorously named ‘jazzbot’, a nod to the contemporary jazz pianist Robert Glasper, is algorithm-based software that spontaneously improvises solos of his own.

How does it work? Depending on what sort of solo you’re in the mood for, you can turn his dials to use as much or as little of each technique as you like: triplets, chromaticism, enclosures, blues. You can programme him to burn up the keyboard like Oscar Peterson or hang back like, well, like Robert Glasper.

Dave Clements, its designer, is a lecturer at Middlesex University, a sound engineer and a programmer who is fascinated by jazz. I sat down with Dave to find out what his creation can do and whether it’s here to put jazz musicians out of the few jobs we have left.

How did you come up with the idea for a soloing jazzbot? How much work did it take?

It all started when I began wondering just how possible a soloing jazzbot really was! It’s been a lot of work. I’ve been tinkering with it on and off for two years. There are a few intricacies I haven’t fixed, and I always get new ideas. It will probably never end.

How is this different to a backing track, or to other software of its kind? What makes Robot Glasper unique?

Backing track apps like iReal Pro will perform the harmony for you, making some decisions about voice leading, rhythm and bassline, but they don’t tend to improvise solos. Robot Glasper works entirely in real time, making decisions in the moment based on what it has just done. This is different to other programmes, such as Robert Keller’s Impro-Visor, which thinks through the whole solo and writes it out before performing it. My robot genuinely doesn’t know what it’s going to do next until it happens.

What’s remarkable is that, for a robot, he doesn’t sound that square. In fact, since he invents lines rather than playing set licks and phrases, he sounds like he’s trying to be hip! How exactly does he decide what to do next?

It’s all based on probabilities. Whatever it has just done makes certain outcomes more or less likely. For example, if he begins a triplet group, he suddenly becomes much more likely to complete it. Or if he does something unusual, like playing a chromatic note on a weak beat, he will feel the need to resolve it in a strong way. He can keep delaying the resolution; it’s just less likely. There are a ton of little rules like this that make it work.

It’s all based on a mathematical concept called Markov chains, in which the probability of each next event depends on what has just happened, and that’s maybe his biggest limitation. Unlike a human, Robot Glasper doesn’t decide on a future destination and find a way to get there; he’s always basing his choices on what happened before. The future only exists as probabilities.
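To make the idea concrete, here is a minimal sketch of a first-order Markov chain choosing one musical event at a time. It is not Robot Glasper’s code: the event labels (`chord_tone`, `chromatic_weak`, `triplet_start` and so on), the probabilities and the `dials` multipliers standing in for the dials mentioned earlier are all hypothetical, chosen only to mirror Dave’s examples of a started triplet almost always being completed and a weak-beat chromatic note usually, but not always, resolving.

```python
import random

# Hypothetical event labels and transition probabilities, invented for
# illustration. Each row gives the chance of every possible next event
# given the current one; every row sums to 1.0.
TRANSITIONS = {
    "chord_tone":     {"chord_tone": 0.4, "scale_step": 0.3,
                       "chromatic_weak": 0.2, "triplet_start": 0.1},
    "scale_step":     {"chord_tone": 0.5, "scale_step": 0.3,
                       "chromatic_weak": 0.2},
    # A chromatic note on a weak beat usually resolves next,
    # but can keep delaying the resolution with a smaller probability.
    "chromatic_weak": {"resolve": 0.8, "chromatic_weak": 0.2},
    "resolve":        {"chord_tone": 0.6, "scale_step": 0.4},
    # Once a triplet has started, completing it is very likely.
    "triplet_start":  {"triplet_end": 0.95, "chord_tone": 0.05},
    "triplet_end":    {"chord_tone": 0.5, "scale_step": 0.5},
}


def next_event(current, rng, dials):
    """Pick the next event using only the current one (first-order Markov)."""
    options = TRANSITIONS[current]
    events = list(options)
    # Hypothetical "dials": scale each probability by a user setting
    # (default 1.0). random.choices normalises the weights itself.
    weights = [options[e] * dials.get(e, 1.0) for e in events]
    return rng.choices(events, weights=weights, k=1)[0]


def improvise(length, start="chord_tone", seed=None, dials=None):
    """Generate a line of abstract events, one decision at a time."""
    rng = random.Random(seed)
    dials = dials or {}
    line = [start]
    for _ in range(length - 1):
        line.append(next_event(line[-1], rng, dials))
    return line


print(improvise(12, seed=1))
print(improvise(12, seed=1, dials={"chromatic_weak": 2.0}))
```

Turning a dial up makes that kind of event more frequent; setting it to 0 removes it from every choice. Because each step only looks at the previous event, nothing in this sketch plans towards a later goal, which is the limitation Dave describes.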

‘The future only exists as probabilities’—I think that really describes some of the joy of improvising. What uses do you think robots like this might have for jazz musicians, teachers and students? Despite what Wynton says, will we want to play with them?

Yes, I think jazzbots are going to become more commonplace. Accompanying a robot’s solo will be really useful practice for ‘comping’, or improvised accompaniment. My big hope is that it will give us more inspiration for our own solos. Human musicians suffer from ‘familiarity bias’: we naturally lean towards what we already know, and that makes fresh ideas hard to find. That’s why I’ve kept the boundaries hazy and given my robot the constant possibility of new directions.

So, your robot sounds more human if he makes mistakes?

Exactly. The more you take away his ability to do ‘un-jazzy’ things, melodic lines that don’t ‘work’ musically, the more you take his choices away, and make him sound formulaic and dull instead of spontaneous and unpredictable. Just like any real musician, he comes out with some weak stuff, but then might play some genuinely interesting lines that a human player, in their limited imagination, just might not think of. If you’re bored of what you play over a certain progression, you can let him have a go, and of course, he might give you a few bad takes. But the other takes might be more interesting and unexpected for having unusual or strange moments in them.

Are there things robots still can’t copy, like groove, time-feel, or swing?

Well, swing is relatively easy to copy in a purely mathematical sense: it’s just a way of dividing up the beat. But when we talk about real swing, we’re talking about more than that: as you say, time-feel and groove. There are all sorts of little irregularities in human playing that make it sound human, and they’re a lot harder to emulate. You can program a certain amount of push and pull, but a robot solo will always come out different to a natural performance, where those variations happen for a reason even if the player isn’t consciously thinking about them. When a human drags or rushes the time, it’s because they have an idea of how they want that phrase to sound; dragging or rushing at random, based on probability, doesn’t have the same effect.
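Dave’s point that swing, in the mathematical sense, is ‘just a way of dividing up the beat’ can be shown in a few lines. This is a hypothetical sketch, not how Robot Glasper handles timing: `swung_onsets` places pairs of eighth notes using an assumed swing ratio (2.0 gives the familiar triplet-based 2:1 feel), and `humanise` adds the kind of purely random push and pull he describes, with no musical intention behind it.

```python
import random


def swung_onsets(beats, swing_ratio=2.0):
    """Return onset times (in beats) for pairs of eighth notes.

    swing_ratio is the length of the first eighth relative to the second:
    1.0 is straight, 2.0 is the triplet-based 2:1 swing feel.
    """
    long_part = swing_ratio / (swing_ratio + 1.0)  # fraction of the beat for the first note
    onsets = []
    for beat in range(beats):
        onsets.append(float(beat))        # eighth note on the beat
        onsets.append(beat + long_part)   # off-beat eighth, delayed by the swing
    return onsets


def humanise(onsets, max_shift=0.03, seed=None):
    """Nudge each onset early or late by a random amount (in beats).

    The shifts are purely probabilistic: unlike a human player's,
    they are not tied to how any phrase is meant to sound.
    """
    rng = random.Random(seed)
    return [t + rng.uniform(-max_shift, max_shift) for t in onsets]


straight = swung_onsets(2, swing_ratio=1.0)
swung = swung_onsets(2, swing_ratio=2.0)
print(straight)  # [0.0, 0.5, 1.0, 1.5]
print(swung)
print(humanise(swung, seed=4))
```

The first function is the ‘relatively easy’ part; the second shows why random jitter alone falls short of real time-feel, since nothing connects each nudge to the shape of the phrase.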

If you remember the film The Matrix, the agents are flawless but beatable, because they’re based on rules. That’s exactly how algorithms work: if you can describe something to them as a rule or set of rules, that’s how they understand it. But some aspects of playing become so complex, with so many variables, that they’re hard to describe in terms of rules. Have you ever sat down and tried to think about why Miles Davis plays certain notes at certain velocities at certain points of a solo? Could we even describe that as a set of rules? That’s why Miles isn’t in any danger just yet: you’d need the robot to understand concepts like energy, emotion and tension. Who knows whether those things could one day be described as rules?

One way to achieve something along those lines is through machine learning, or AI, where the jazzbot makes a ‘pastiche’ based on the material it studies, but that wasn’t what I was trying to create. I wanted something that doesn’t only pastiche, but could potentially develop what we think of as ‘jazz language’. Another difficulty is getting it to think about a solo in a bigger-picture way. Robot Glasper is pretty good at creating lines and phrases, but less good at thinking about how those lines and phrases fit together to create a wider narrative. I’m working on solving this next!

Did you use any particular references in creating Robot Glasper? Such as particular artists or music?

I was interested in ‘Line Up’ by Lennie Tristano. Obviously it’s a classic, but what caught my attention was how Tristano famously slowed down the accompaniment to record his own solo. It seemed like an interesting idea to take that limitation away because, of course, a computer can think really quickly.

In time will we eventually have a robot who can pass the ‘Turing Test’ of being indistinguishable from Art Tatum, say, or even one day, Miles Davis?

Artificial soloing is much easier to do on piano, as there are fewer variables than for horns and it translates more easily to digital. Pianists are always gigging with digital instruments even if they moan about it, but you don’t see that with sax, because it’s about much more than just which note you hit, when you hit it and how hard. Maybe my robot will always sound like a robot, but that could be valuable in itself. It’s a strange goal to make something sound indistinguishable from Miles. Perhaps that degree of exact similarity would have the opposite effect and convince a Miles enthusiast that it was in fact a robot!

The Turing Test usually involves a machine trying to convince someone that it’s human, which is a wider brief than being a particular human. I think my programme could convince someone that it’s an unspecified jazz soloist. Of course, it depends who you’re trying to convince. Perhaps the Turing Test equivalent here might be to convince another band member, such as the bass player or drummer, that they were playing with a human being. It would be an interesting experiment to blind test!

I’d like to try, especially if it could react to my own playing. Perhaps the Turing Test for the listener is whether we no longer know or mind the difference. If a robot’s solo were good enough, or alive enough, to inspire the same emotions in us as the playing of a real person, then perhaps it would have passed the test.

What does the future hold for jazz robots? Are we in competition with them or not? What improvements are still to come?

I’ve had a lot of fun pushing the program to see how far it can go, but I don’t really think of it as being in the same world as a human soloist. I think AI and algorithms can be tools that help us, but only a human can ever truly put their lived experience into their art. Then again, AI art and driverless cars both seemed impossible not so long ago, so who knows? I think jamming with, and learning from, robots can help us become better players, and to me that’s a good thing.

Interesting. Perhaps robots are here to help us learn how to be better humans!

Follow Peter Cole at:

http://www.peterplaysmusic.co.uk

http://www.instagram.com/peterplaysmusic

http://www.facebook.com/peterplaysmusic

http://www.youtube.com/peterplaysmusic

http://www.soundcloud.com/peterplaysmusic

COMMENTS;

Ian adds:

This makes for fascinating reading and I’m grateful to Peter for allowing it to be shared on The Jazzmann.

Peter’s article reminds me of “Garden of Robotic Unkraut”, the recent album from the Vienna-based trio Blueblut. The recording features the trio of Mark Holub (drums), Pamelia Stickney (theremin) and Chris Janka (guitar), plus the Totally Mechanized Midi-Orchestra, a collection of six mechanical musical instruments invented by Janka, effectively ‘musical robots’.

2021 saw the band collaborating with guitarist and software experimenter Nicola Hein, who developed customized artificial intelligence software that enabled the ‘robots’ of Janka’s TMMO to respond to the live musicians, effectively allowing them to improvise. The last five tracks on the new album are improvisations in which the members of Blueblut interact with Hein and the ‘robots’.

My review of “Garden of Robotic Unkraut”, which includes fuller details about the making of the album, can be found here:

https://www.thejazzmann.com/reviews/review/blueblut-garden-of-robotic-unkraut

More information on the Totally Mechanized Midi-Orchestra can be found at http://www.midi-orchestra.net

Blueblut website http://www.blueblut.net
